A 3-part series on Zola & the future of context-aware AI in South Africa

Part 1: On Origins, Vision, and Positioning

There's a young person in South Africa who finishes a skills training course, job readiness workshop, or youth development intervention and hears nothing back. No follow-up. No call. No clear next step. Just silence.

From what I’ve gathered in my conversation with Jason, a conversation I’m bringing you into through this series, that silence was treated as the starting point. Not a problem to solve, but a condition to design around.

This week, I'm stepping away from my usual Wednesday AI reflections to take you behind the scenes of an AI experiment unfolding in South Africa. At the center of this conversation is Zola, “an AI-powered assistant, but not your typical chatbot. It’s built for the youth development ecosystem in South Africa. Think of Zola as a digital companion for youth organisations and the young people they serve, helping them access the right tools, knowledge, and opportunities at the right time,” Jason explained.

Built by Jason and his team at Capacitate on top of Silicon Valley’s tech, Zola is shaped by local knowledge, Ubuntu values, and a very specific use case: what happens when care systems go quiet.

“We’re using that very same tech ourselves,” Jason explained. “World-class LLMs like Meta’s LLaMA 3, OpenAI’s models, and Google's infrastructure form the foundation of Zola’s engine. We’ve taken the power of Silicon Valley’s language models and fused it with a curated, community-rooted knowledge base, built from real questions, real programmes, and real needs in the South African youth development space. Zola gives us the best of both worlds: global AI capacity, infused with local wisdom.”

The team has agreed to share their process publicly over the next three weeks, a rare glimpse into how values show up in design choices. This matters because AI is arriving everywhere, but it isn't arriving equally: it’s being shaped in places far removed from the communities it affects. The assumptions, values, and incentives baked into these systems rarely reflect the lived realities of people outside a few major tech hubs.

That's why I'm paying attention to projects like this, not because they're polished or proven, but because they're trying something different. They're asking whether AI can be useful even though it’s built on extractive and exploitative practices. Can AI serve real needs without flattening complexity?

This series is a chance to slow down and observe one such attempt: to sit with the tradeoffs, the philosophy, and the gaps. In this first of three parts, we dive into where the work began, what it's trying to address, and the early attempts to define what community-centered AI might look like.

The Problem They're Solving

The context is the South African youth development space: the programmes and interventions aimed at improving the socio-economic well-being of South African youth. In most cases, a young person finishes a skills course, a few financial literacy sessions, or a short-term placement, and then that's it. No follow-up. No bridge to what comes next. Meanwhile, the small organizations trying to support them are dealing with their own limitations: underfunded, under-resourced, and often disconnected from the very systems they're meant to work within.

When I asked Jason what problem they were trying to solve, he started with this familiar gap, the kind that shows up quietly after a programme or intervention ends.

“We created Zola because existing AI tools are mostly built for commercial use," Jason told me. "They're great at selling things or answering generic questions. But they don't understand our context. They don't know what it means when a young person exits a programme with no follow-up support. And they definitely don't help a small grassroots organisation figure out where to find funding or how to design a safe, inclusive youth programme."

“These tools don't know what youth services exist in a specific township, or how local funding systems work, or what tone you need when talking to a pregnant teenager trying to stay in school. Most importantly, they aren't designed to care about any of that. Zola was created as a response to that kind of silence,” he added.

“Zola exists to bridge that gap, to bring the principles of Ubuntu (shared humanity, mutual care, connection) into the digital space. It’s AI with a conscience, grounded in local relevance and community service,” Jason continued, describing what Zola is.

In other words, they started with a question: Could an AI tool support people in a way that makes sense here? Whether or not that’s possible or sustainable is still unclear. But that’s the bet they’re making: that a different kind of intelligence might be possible, even if it’s built on top of systems that weren’t designed for care in the first place.

Three Ways to Think About Zola

As a Tool: At the surface, Zola is an AI assistant built for South Africa's youth development ecosystem. It's embedded in platforms already used by youth and civil society groups. It can surface information about funding, safeguarding, job opportunities, and mental health support. Technically, it's powered by mainstream large language models with guardrails and custom prompts layered on top.

As a Response: Jason describes Zola in terms of absence, the kind that appears when a programme ends and nothing follows. Or when a youth worker doesn't know where to refer someone. Or when a small grassroots organization can't access the tools to build something safe. Jason is also careful not to frame Zola as an answer to every need. "Zola is not designed to replace counsellors, social workers, or community-based practitioners," he told me. "It's here to support them. To create more access, not take over their roles."

That distinction shapes how the agents speak, what they avoid, and how they're configured to handle risk. When a user brings up something serious like violence, self-harm, or trauma, Zola doesn't engage in therapeutic dialogue. It redirects. It points to resources. It gets out of the way. "Zola knows what it can and can't engage on," Jason said. "There are clear red lines. We've embedded moderation rules and guardrails into the AI's design, so it knows how to triage appropriately, when to refer out, and when not to respond at all. For complex or sensitive issues like suicide, abuse, or trauma, the agent doesn’t attempt to counsel, it immediately redirects to qualified, verified service providers."

The boundaries are as much technical as they are ethical. Zola is positioned as a triage tool: not a therapist, not a diagnosis engine. “It listens, helps the user articulate their need, and then guides them to the most relevant local service or opportunity,” Jason added. “It provides consistent, accessible information and direction, not deep psychosocial care. That work still requires people. And we honour that.” So Zola doesn't claim to be intelligent in any grand sense. It holds a small slice of responsibility and stays within it.

As a Question: Jason and his team are under no illusions about the risks involved. “We absolutely expect and welcome criticism about the risk of replacing the human touch. There’s always risk in innovation,” he told me. “But the far greater risk is doing nothing and allowing the social sector to fall further behind while others leap ahead. Failure isn’t just possible; it’s part of the process. Every mistake is an opportunity to learn, adapt, and build better.”

He is also clear about the boundaries: “Zola doesn’t replace humans, it connects them. It doesn’t diagnose, it refers. And decisions about what Zola can and can’t do aren’t made in isolation, they’re guided by ongoing input from practitioners, communities, and ethical review. If we don’t explore how to responsibly use this technology,” he said, “someone else will without our values, our context, or our care.”

So the question Zola is asking is a values test. It’s presented in the form of a system, but what it challenges is how we design for trust without pretending to know everything. When I asked how the system decides how to speak, how it chooses tone, persona, and presence, Jason explained that “the team creates a detailed persona for each agent to guide the way it responds.” However, “there are limitations in the formulation of the persona,” he noted, “because we are still using the existing LLM capabilities and foundational training models and data. It is a process of continuously refining the agent persona, and the team actively considers the local context and setting. We have drawn on our collective sector experience and input from some of our youth development partners to define the agent personas.”

The question remains open not just for the team, but for the rest of us. Zola is obviously not asking whether AI can help. It’s asking what kind of help we’re willing to accept when care is stretched thin. It’s testing whether it’s possible to embed intelligence into support systems without flattening the people those systems serve.

If a tool like Zola becomes embedded in public services, youth programmes, and education spaces, who decides how it speaks? What does it know? What does it never say? And more urgently: what happens if we ignore those questions, and someone else answers them first?
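Part of the answer to "what it never says" lives in the "clear red lines" Jason describes: moderation rules that decide when Zola refers out instead of responding. The article doesn't describe how those rules are implemented, so the following is only a minimal sketch, assuming a check that runs on every message before any answer is generated. The topic names, trigger phrases, and the `triage` and `respond` functions are hypothetical, and simple keyword matching stands in for the trained moderation classifiers that production systems more commonly use.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Route(Enum):
    REFER_OUT = "refer_out"  # red-line topic: hand off to verified providers, never counsel
    ANSWER = "answer"        # in-scope question: answer from the curated knowledge base


# Hypothetical red-line topics and trigger phrases, for illustration only.
# Production systems usually rely on a trained moderation classifier instead.
RED_LINES = {
    "self_harm": ("suicide", "kill myself", "self-harm", "hurt myself"),
    "abuse": ("abuse", "assaulted"),
    "violence": ("violence", "beaten", "threatened"),
}


@dataclass
class TriageResult:
    route: Route
    topic: Optional[str] = None


def triage(message: str) -> TriageResult:
    """Check a message against red-line topics before any answer is generated."""
    text = message.lower()
    for topic, phrases in RED_LINES.items():
        if any(phrase in text for phrase in phrases):
            return TriageResult(Route.REFER_OUT, topic)
    return TriageResult(Route.ANSWER)


def respond(message: str) -> str:
    """Route first; only in-scope messages ever reach the answering step.

    The returned strings are placeholders for a referral message and a model call.
    """
    result = triage(message)
    if result.route is Route.REFER_OUT:
        # No therapeutic dialogue: point straight to qualified, verified services.
        return f"REFER:{result.topic}"
    return "ANSWER"
```

The design choice the sketch illustrates is ordering: the red-line check runs first, so a sensitive message is routed to a referral before a language model has any chance to improvise a counselling response.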

What Makes This Different

If someone asks why not just use ChatGPT, Jason's answer is direct: "It's a fair question. ChatGPT is more and more accessible, no doubt. But Zola is purpose-built for a completely different use case."

Three key differences:

First, "Zola has training on a dynamically curated, localised knowledge base specific to South African youth and youth-serving organisations." Earlier in our conversation, Jason explained that "The YDColab has been busy with a rolling mapping process to identify civil society organisations that provide services for youth specifically. This draws on their member base of 150+ organisations but also on an active community mapping process where the team finds and verifies organisations in communities that support youth. This involves confirming the organisation details and the services that they offer. Zola then indexes and ingests this directory to be able to provide location-specific information to youth."

So Zola "doesn't give generalised advice, it gives relevant, verified, and context-aware responses, based on real resources, active service directories, funding tools, and organisational support content."

Second, "Zola also has a curated persona: it's not a general-purpose assistant, it's a trusted guide, built with a tone and style designed specifically for youth empowerment and ecosystem support. It reflects the values of Ubuntu support, dignity, and connection."

Third, "Zola will be embedded where it matters, directly inside platforms like Yubuntu Connect. That means no separate app, no confusing logins, no barriers. Just immediate, mobile-friendly, locally relevant support, right where young people and organisations already are."
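The first difference rests on a verified directory that Zola "indexes and ingests" to give location-specific answers. The article doesn't say how location matching works, so here is a minimal sketch, assuming each directory entry carries a set of services, coordinates, and a verification flag. The `Organisation` fields and the `nearby_services` function are hypothetical; distance is computed with the standard haversine formula.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Organisation:
    """One entry in a hypothetical verified service directory."""
    name: str
    services: frozenset
    lat: float
    lon: float
    verified: bool  # True once details have been confirmed, e.g. by a call centre


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points, in kilometres."""
    r = 6371.0  # mean Earth radius in km
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = math.radians(lat2 - lat1)
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def nearby_services(directory, service, lat, lon, radius_km=25.0, limit=3):
    """Return verified organisations offering `service` within `radius_km`, nearest first."""
    matches = []
    for org in directory:
        if not org.verified or service not in org.services:
            continue  # skip unverified entries and organisations without the service
        distance = haversine_km(lat, lon, org.lat, org.lon)
        if distance <= radius_km:
            matches.append((distance, org))
    matches.sort(key=lambda pair: pair[0])  # nearest first
    return [org for _, org in matches[:limit]]
```

Filtering on the verification flag before ranking is the point of the sketch: an unverified listing is never offered, however close it is.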

Zola in Context

It becomes clear that Zola doesn’t exist on its own. It’s one part of a growing system of digital agents designed to operate within platforms already used by South African youth and the organisations that support them. This includes both CDE’s ongoing work and Yubuntu, a multi-platform initiative with two distinct components:

A Community of Practice (CoP) for youth development practitioners, operated by YDColab

A national alumni platform that helps organisations stay connected to youth post-programme and support them toward their next step

In these spaces, Zola takes on different forms.

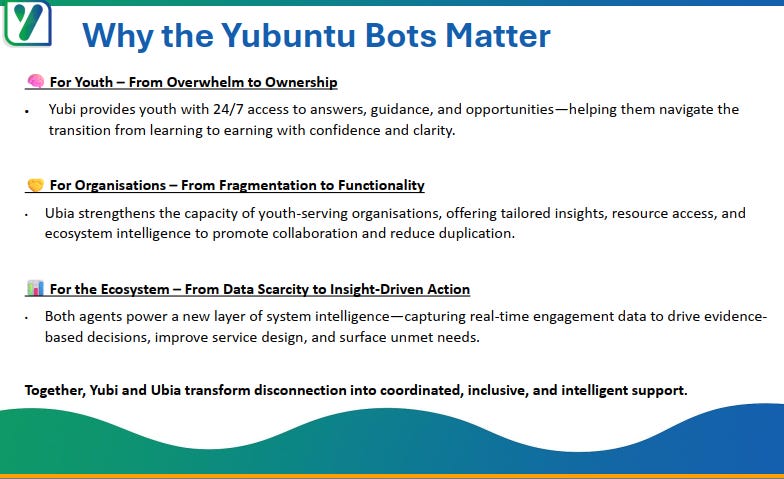

Yubi

Yubi is the agent built for young people. It offers chat-based guidance on things like CVs, job readiness, and navigating transitions from school or training into work. It draws from a curated knowledge base and provides access to nearby services, funding opportunities, and mental health support. The goal is to make that moment of “what now?” feel less overwhelming.

Ubia

Ubia is aimed at organisations. It supports capacity-building: monitoring and evaluation, programme design, safeguarding, and funding tools. It’s less of a chatbot and more of an embedded layer, surfacing intelligence across the ecosystem and helping organisations avoid working in silos.

But the infrastructure extends beyond individual agents. The team is also preparing to launch Impact Manager, a multi-tenant digital platform that brings these agents, tools, and systems together. Organisations will be able to deploy their own AI agents, manage curated knowledge bases, collect and visualise field data (online or offline), send targeted messages via push or WhatsApp, and even manage disbursements like stipends or task-based payments.

Behind this infrastructure is a verification process that ensures the data Zola draws from is accurate, a critical safeguard against hallucinations (or, more precisely, confabulations) that could misdirect someone in need. "Currently, this is a process of directly reaching out to the organisation through a youth call centre to confirm all their details. In the future, we plan to extend this verification to the Community Digital Enabler network that can provide direct on-the-ground verification with GPS coordinates," Jason explained.

The scope of what they're connecting becomes clearer still when you consider scale: the system will also connect to the National Youth Explorer database. As Jason described: "Nothing much changes for the agent or the users, it just expands the range of data that the agent can draw on to provide a location-specific response. Youth Explorer has thousands of public service points in the database. We provide an API for Zola to be able to access that database and find additional service information."

Jason describes Impact Manager as part of their platform cooperative approach:

“Impact Manager is Capacitate’s next-generation digital platform and AI-enhanced toolkit,” he told me, “designed to make monitoring, evaluation, reporting, and learning faster, smarter, and more inclusive for impact organisations. It brings together human insight, digital tools, and AI agents into one secure and accessible ecosystem, helping civil society organisations, funders, and social enterprises better track, analyse, learn, and communicate impact. It will also allow organisations to curate their own knowledge bases and deploy client- or beneficiary-facing agents onto existing digital platforms quickly and easily.” He added, “The Impact Manager roadmap includes:

Internal AI Agent deployment – to have conversations with your own documents and databases

External AI Agent deployment – to allow clients and beneficiaries to have a conversation with a curated knowledge base and databases

Online and Offline field data collection and visualization

Field notification and communication using push notifications and WhatsApp

Disbursements – managing direct stipend payments and task payments as an ad hoc process or on a scheduled basis with immediate settlement.”

It seems the idea is not to centralise control, but to enable organisations to operate their own tailored, secure digital environments, with AI as one part of a broader support infrastructure.

None of this is static. The infrastructure is evolving. The claims are careful. But clearly, what’s being built is a layered attempt to weave AI into the structure of care and coordination, embedded where people already are instead of asking them to adapt to yet another new system.

A Sovereign Layer

On African sovereignty, where these discussions intersect with technological independence and socially-driven innovation across the continent, Jason said: "The idea of African AI sovereignty is about more than just owning the servers or coding the models, it's about ensuring that the intelligence we use reflects our realities, our values, and our people. Yes, Zola uses existing large language models like Meta's LLaMA 3 to power its conversational capabilities. But the knowledge it draws from isn't embedded in the foundational model, it's kept separate, in a curated, community-driven knowledge base. This separation is crucial. It allows us to leverage the power of Silicon Valley's tech while protecting the integrity of African knowledge, experience, and service ecosystems. Our content isn't being scraped, harvested, or assimilated into global models, it stays ours, updated by us, for our communities."

He continued: “Zola is part of a broader movement for socially-driven innovation in Africa, where tech is not just localised, but locally governed, context-sensitive, and rooted in principles like Ubuntu. It’s a practical step toward technological independence, without waiting to reinvent the LLM wheel. We’re not building AI in Africa to compete with the West, we’re building it to serve Africa better.”
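Jason's distinction between the foundation model and a separate, community-curated knowledge base matches a common pattern usually called retrieval-augmented generation: the model's weights are left untouched, and relevant passages are looked up and placed into the prompt when a question arrives. The article doesn't name the technique Zola uses, so this is only a sketch under that assumption. `retrieve` and `build_prompt` are hypothetical names, simple word overlap stands in for the embedding search most real systems use, and the call to the language model itself is left out.

```python
import re


def tokenize(text: str) -> set:
    """Lower-case word set used for a crude relevance score."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def retrieve(question: str, knowledge_base: list, k: int = 2) -> list:
    """Rank curated passages by word overlap with the question; keep the top k that overlap at all."""
    q = tokenize(question)
    scored = [(len(q & tokenize(doc)), doc) for doc in knowledge_base]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable sort keeps ties in original order
    return [doc for _, doc in scored[:k]]


def build_prompt(question: str, knowledge_base: list, k: int = 2) -> str:
    """Assemble a prompt that tells the model to answer only from the retrieved passages."""
    passages = retrieve(question, knowledge_base, k)
    context = "\n".join(f"- {p}" for p in passages) or "- (no matching passages)"
    return (
        "Answer using only the passages below. If they do not cover the question, say so "
        "and suggest a verified service instead.\n"
        f"Passages:\n{context}\n"
        f"Question: {question}"
    )
```

Because the passages live in an ordinary list the team controls, updating or removing knowledge means editing that list, not retraining the model.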

Ubuntu in Practice

At the heart of this is a design logic shaped by Ubuntu, not as a metaphor but as practice: shared humanity, mutual care, collective responsibility.

"When we say community-centered AI, we mean AI that is built with, for, and around the needs of real people in real communities: not generic users, not global markets, but youth in South Africa and the organizations that support them," Jason explained.

This shows up in tone as much as function. Zola was designed to sound respectful, steady, and "humble," so it doesn't posture or pretend to know more than it does. It's AI designed to behave as if it belongs to someone who cares what happens next; in other words, it aims to qualify as Responsible AI. "We often talk about community-centred AI. But for us, it means something specific. It means asking: how do we make sure this tool extends the reach of real people, instead of replacing them? How do we keep it relational, not extractive?" Jason added.

Zola isn’t hypothetical

It’s beginning to take shape inside real platforms, including a soft launch with Edunova this coming Friday, where it’s being tested in live environments and quietly integrated into youth development systems already in motion.

The infrastructure is still evolving. The boundaries are still being tested.

This first part has been about intention: what the team set out to build, and the values they're trying to protect. Next Wednesday, we'll look beneath that intent, diving into the infrastructure questions, safety architecture, ethical framework, quality control, and platform cooperative model.

In the meantime, I'm curious what you see from the outside. If something like this were embedded in your world, not as a new app, but inside the systems you already use, what would you want it to understand? And where would you want it to pause?

What are your thoughts on this approach? How do we balance innovation with care? Hit reply and let Jason and his team know what resonated, what challenged you, or what questions this raised.

Until next time!

Join the mission

This newsletter is independently researched, community-rooted, and crafted with care. Its mission is to break down walls of complexity and exclusion in tech, AI, and data to build bridges that amplify African innovation for global audiences.

It highlights how these solutions serve the communities where they're developed, while offering insights for innovators around the world.

If this mission resonates with you, here are ways to help sustain this work:

📩Become a partner or sponsor of future issues → reambaya@outlook.com

→ 🎁Every child deserves to be data literate. Grab a copy of my daughter's data literacy children's book, created with care to spark curiosity and critical thinking in young minds. (Click the image below to get your copy!)

You can also sponsor a copy for a child who needs it most or nominate a recipient to receive their copy. Click here to nominate or sponsor.

→ 🧃Fuel the next story with a one-time contribution. Click the image below to buy me a coffee (though I'd prefer a cup of hot chocolate!)

These stories won't tell themselves, and mainstream tech media isn't rushing to cover them. Help ensure these voices reach the audience they deserve.

Let’s signal what matters together.
